If you missed Autodesk CEO Carl Bass present his keynote at RTC USA, you missed something wonderful.

First of all - he didn't wear one of those wrap-around headsets. He leaned into the podium in a way that seemed to "ground" him (after having just descended from northern California) and began to present his case in a refreshing and relaxing manner.

Side note: on the topic of headsets, please allow me to say what everyone else is thinking: it's really hard to take anyone seriously while they're wearing one. Madonna got really close and failed. Everyone else kinda looks like they escaped from a call center.

I'm trying...but I think my "come-hither" look is broken.

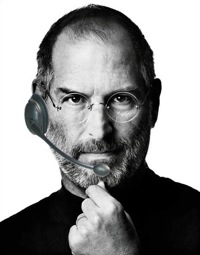

If only there were a rule of thumb. Well it turns out there is. When all else fails it pays to remember "WWJD": What Would Jobs Do.

Left Image: Look into my eyes...you need a new iPhone.

Right Image: Welcome to Olive Garden...table for two?

Second, Carl wasn't in a room of thousands of people, thousands of feet away and on a giant screen. He was right there, in a room of 250 or so technology directors, managers and business owners. Was he "one of us"? No - don't kid yourself. As CEO of a $2 billion+ company there's a lot to be distracted about. But he was extremely gracious and approachable.

Finally, Carl's keynote was deeply sincere. He argued clearly and compellingly that we're rapidly entering a new age of computing and the implications for the design space are far-reaching:

- The cost of compute is racing to $0.00.

- Computers are everywhere. There's even one in your pocket.

- Connectivity used to be a differentiator. Now it's a commodity.

Three key words: Infinite, Ubiquitous and Connected.

During his presentation, Carl presented two very compelling examples specific to the AEC space (there were many more). Project Neon from Autodesk Labs allows Revit files to be rendered in the cloud (where compute is fast and nearly free) while allowing the designer to continue working on their design in Revit. Another example was an extension of Ecotect that allows wickedly fast CFD analysis that is accurate within 2%. And while engineering purists may turn up their noses at less than four nines of accuracy, Carl believes (and most of us will agree) they're missing the point: there's no such thing as "exactly" right when the design hasn't settled down. So a very close answer today is far more useful than an exactly right answer two weeks from now.

I like to diagram things and I came away from the discussion with something like the image below (originally sketched on my iPad with SketchBook Pro - another great Autodesk tool). Here's a more polished version:

The flow of design data: Infinite, Ubiquitous, Connected

In the above scenario, everything flows from the design teams that author the data. Presently, the data flows to other desktop applications used by other domain experts. This workflow should be pretty familiar to most of us; we use tools like Revit, Inventor and Civil3D to initiate the design, be it building, content or site. And as the design is authored, it's being leveraged by other tools that complete and validate the design ecosystem.

- For visualization, 3ds Max doesn't just render photo-realistically. It's used for material and geometry iteration - resulting in "emotive" visualization (with the addition of entourage that would quickly weigh down a Revit file).

- For analysis, Ecotect leverages the BIM data for multiple tasks in a single application as well as online resources that truthfully connect your project to a specific location and orientation.

- To manage the project, Constructware allows the project team to centrally access project information from a web browser. This vastly improves version control, documentation, project communication and accountability.

- Navisworks is a whole suite of products focused on resolving construction. Just being able to assemble all the different design files in one place is a hurdle in itself. But beyond that, the teams can resolve physical as well as time-based project clashes.

- Finally - the building is going to be operated for many years. In this arena, I think ADSK would be the first to admit that there's no one right answer (or product) that has emerged. Some want to continue to leverage Navis for this role (after spending so much time aggregating the design data and geometry). Others want to leverage the initially authored design files (Revit) for this task. There are strong opinions on both sides.

I've just highlighted a few tasks and tools that fall into big arenas of design validation - so there's going to be lots of debate about the particulars. But while we may disagree on the tools and granularity, most of us probably recognize the process:

- Author the design data with one set of tools

- Validate the design data with another set of tools

But according to Carl, this familiar process has already started to change. Rather than move information from desktop to desktop, it'll move from desktop to cloud - where far more compute-intensive resources wait to give us really useful answers as quickly as possible, at less cost, and without tying up the desktop.

So what's not to like?

Well, first of all, it's something that I've been thinking about for a long time (not just after Carl's presentation). I've been thinking about this almost since I began implementing Revit. And I'm not going to contradict Carl's well-considered presentation. He's on the right track.

However, I would like to tweak it slightly - in two key areas. A few blog posts ago I discussed how Revit (at least when it was first released) wasn't the best at anything. But it excelled in two areas: design information was concurrent and it was integrated.

So the first tweak has to do with the notion of truly useful information being "Concurrent". I admit, the idea of concurrence is deeply tied to Carl's notion of "infinite" computing, so you might think I'm being overly pedantic. But I think it's an important distinction that should get its own bullet point (and since I'm only adding two bullet points they all still end up on one hand at the beginning of a presentation).

For information to be really useful, it has to arrive as close to real time as possible; this goes for news, pregnancy tests, stock quotes - and somewhere on this long list is design analysis. And the "compute" of analysis can happen really quickly in the cloud. But in practice, significant time gets dedicated to preparing the models for analysis. It's not unusual to spend days (and sometimes weeks) preparing models that eventually take a fraction of the time to analyze. Geometry has to be exported, uploaded, downloaded, cleaned up and simplified prior to analysis. And while this manual task is very important, it's not often very interesting.

The second tweak has to do with the importance of "Integration". I'm the first to admit that Revit wasn't the best on a feature to feature comparison (and there's still room for improvement). But on the other hand, using Revit meant the design team wasn't running off in separate silos (CAD, Excel, Max, etc) to do important work. Revit allowed the design authoring team to work from the same version of the 'truth'.

So what's the next big inflection point? Well, I believe Carl is correct. The present paradigm involves moving lots of what's currently on the desktop to the cloud. And that's an important next step even if domains and tools are still "disconnected". Stuff can happen faster.

But I think the process is about to invert. Here's a more polished version:

The bit flips: Concurrent Integration

If this reminds you of what happened with Revit, I don't think you'd be alone. In this scenario, the design geometry and data aren't being decoupled as they're sent to different domain experts for validation. Furthermore, the 'truthfulness' of any one result can be as concurrent as any other result since we're all working from the same version of the truth.

Separate plan, section, elevation, schedule and documentation was once considered a viable business model for representing a building. But this is no longer the case as BIM represents what some believe to be the new "minimum standard of care" (just ask your doctor or lawyer friend about the implications of that term in the medical space). So now that BIM has reshaped our business in the realm of authoring design data, what keeps the separate efforts to visualize, analyze, strategize, construct and manage from being similarly integrated?

If you're a successful, existing company, you don't just have to start. You have to start over. This is an incredibly important distinction.

Existing, separate tools and applications can't just "evolve" into a single toolset. And if you're a large, successful and established software company focused on the AEC space, you already have an existing toolset and org chart that develops, markets, sells, supports, etc. first in class tools that are (unfortunately) disconnected. The challenge isn't just starting. The challenge is starting over.

And starting over is nearly insurmountable due to cost and culture.

On the other hand, if you're a new company, starting over is a call to action! Fly the pirate flag! Existing customer dissatisfaction is like water in the desert. Every point of friction that is practically impossible to address within an old process is welcome news to this new effort. It's an opportunity to not merely improve a bad process, but to make that bad process obsolete.

So integrate the design validation, construction and management space. That's just rendering existing processes obsolete. What's the real "blue ocean"?

There are three (and likely more) incredibly valuable benefits in the AEC space when the sum becomes greater than the parts.

First: Best of Breed. In the first diagram, the authoring tools tend to work really well from within a single ecosystem. Autodesk, Bentley, Graphisoft, etc. all have similar ecosystems to validate AEC design data. And those companies should rightfully look after their own interests first. But the second diagram illustrates a potentially agnostic environment where the design data from multiple platforms (maybe even different versions within the same platform) aggregate agnostically. And the AEC space is really, really hungry for best of breed solutions in the BIM space. And while everyone is busy trying to resolve standards for "interoperability" between design platforms, it doesn't seem like anyone has scratched the surface of agnostic integration in order to concurrently validate visualization, analysis, scheduling, construction and ultimately facility management.

Second: Multiuser Access. We've been alluding to this - but I think it needs restating. The majority of these existing standalone validation tools aren't multi-user environments. So it's very difficult for the team focused on design validation to compare results across presently siloed domains. In other words, my great construction sequence can't influence your emotive visualization. One person's energy analysis can't impact another person's clash detection. And it shouldn't be the job of one person to resolve construction sequencing anyway - the domains in charge of assembling the real building need to get to their part of the virtual building at the same time. And facility management? That data repository must contain geometry and data, and be concurrently accessible by a very large group.

Third: Mass Customization. An agnostic platform for validating design data becomes the de facto platform for developing a multitude of compelling, idiosyncratic but ultimately incredibly useful and valuable applications. Suppose you want to create an upstart analysis tool for a particular contractor in a particular city for a particular building type. That's mass customization - and at present it's nearly impossible in the AEC space. Where to begin? Which platform to choose? Which version? Which OS? But what if there were a third way that allowed your team to leverage centralized, agnostic geometry and data?

And if that environment were Infinite, Ubiquitous and Connected - as well as Integrated and Concurrent?

Well, you wouldn't have invented a better way.

You'd have created an App Store for AEC. ;)

1 comment:

Thanks for the thoughtful insight Phil. I concur infinitely ;)
